Last week I attended 360iDev for the first time. It was très awesome. One of my favorite sessions was on the Accelerate framework from Jeff Biggus (@hyperjeff). One of the biggest barriers to using the framework is a lack of easy-to-follow documentation and sample code.

I went looking for some sample code on how to set up and use the vImage components of the Accelerate framework a few months ago. To my chagrin, there was nothing. Googling just brings you to StackOverflow questions about how to use the framework, with no answers provided. Well, I now provide answers (at least a few).

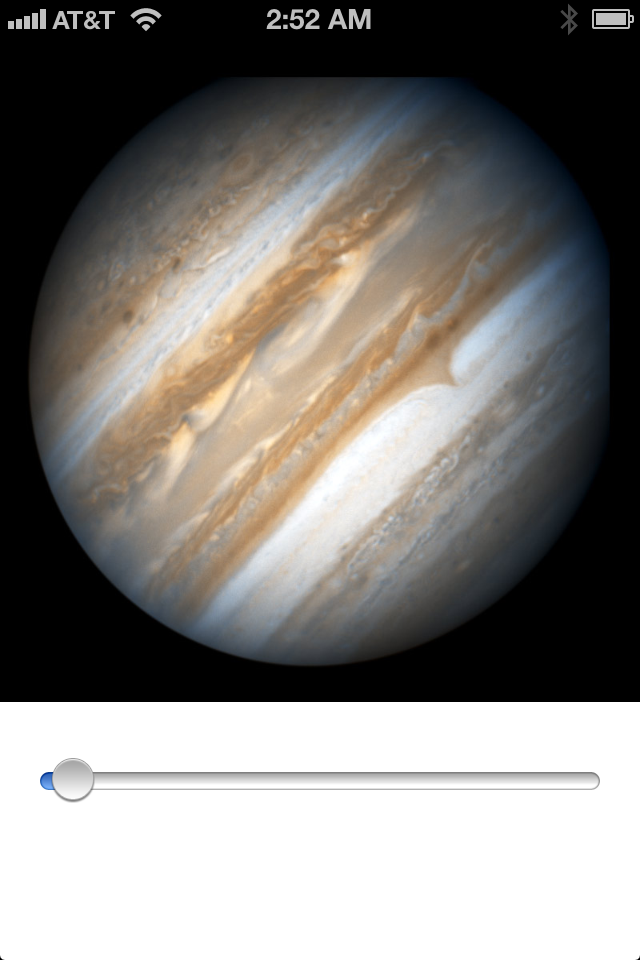

Here’s a look at the sample project:

I’m gonna show you how to create a method that will take in a UIImage and output a blurred version of the image (also as a UIImage). It’s easy to get to and from a CGImageRef via a UIImage, so I’m not going to cover that, but it will be in the sample code provided.

It doesn’t take too many lines of code to use the vImage framework, but it is a C API and without any sample code it can be mysterious as to how to use it (it was to me, anyway). I figured it out by using an example provided by Apple (it was a Quartz Composer plugin).

First you must add the Accelerate framework to your target under Build Phases > Link Binary With Libraries. Then you must import it with #import <Accelerate/Accelerate.h>.

I’m going to encapsulate the whole thing in a method called -(UIImage *)boxblurImage:(UIImage *)image boxSize:(int)boxSize. Here’s that code:

-(UIImage *)boxblurImage:(UIImage *)image boxSize:(int)boxSize {

//Get CGImage from UIImage

CGImageRef img = image.CGImage;

//setup variables

vImage_Buffer inBuffer, outBuffer;

vImage_Error error;

void *pixelBuffer;

//create vImage_Buffer with data from CGImageRef

//These two lines get the data from the CGImage

CGDataProviderRef inProvider = CGImageGetDataProvider(img);

CFDataRef inBitmapData = CGDataProviderCopyData(inProvider);

//The next three lines set up the inBuffer object based on the attributes of the CGImage

inBuffer.width = CGImageGetWidth(img);

inBuffer.height = CGImageGetHeight(img);

inBuffer.rowBytes = CGImageGetBytesPerRow(img);

//This sets the pointer to the data for the inBuffer object

inBuffer.data = (void*)CFDataGetBytePtr(inBitmapData);

//create vImage_Buffer for output

//allocate a buffer for the output image and check if it exists in the next three lines

pixelBuffer = malloc(CGImageGetBytesPerRow(img) * CGImageGetHeight(img));

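if(pixelBuffer == NULL) {

NSLog(@"No pixelbuffer");

//nothing to write into, so release the copied data and bail out

CFRelease(inBitmapData);

return nil;

}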

//set up the output buffer object based on the same dimensions as the input image

outBuffer.data = pixelBuffer;

outBuffer.width = CGImageGetWidth(img);

outBuffer.height = CGImageGetHeight(img);

outBuffer.rowBytes = CGImageGetBytesPerRow(img);

//perform convolution - this is the call for our type of data

error = vImageBoxConvolve_ARGB8888(&inBuffer, &outBuffer, NULL, 0, 0, boxSize, boxSize, NULL, kvImageEdgeExtend);

//check for an error in the call to perform the convolution

if (error) {

NSLog(@"error from convolution %ld", error);

}

//create CGImageRef from vImage_Buffer output

//1 - CGBitmapContextCreateImage -

CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();

CGContextRef ctx = CGBitmapContextCreate(outBuffer.data,

outBuffer.width,

outBuffer.height,

8,

outBuffer.rowBytes,

colorSpace,

kCGImageAlphaNoneSkipLast);

CGImageRef imageRef = CGBitmapContextCreateImage (ctx);

UIImage *returnImage = [UIImage imageWithCGImage:imageRef];

//clean up

CGContextRelease(ctx);

CGColorSpaceRelease(colorSpace);

free(pixelBuffer);

CFRelease(inBitmapData);

CGImageRelease(imageRef);

return returnImage;

}

This method is set up to use UIImages because that’s the most convenient. However, inside the method you convert the UIImage into a CGImage (and then back again before returning). Core Graphics has functions to get from a CGImageRef to the raw data that you need for the vImage methods.

Once you have a CGImageRef the method sets up a couple of vImage_Buffer objects, a vImage_Error, and a pointer to the pixel buffer data (this will be the output buffer’s data).

A vImage_Buffer is a struct that holds the necessary data (including a pointer to the image data itself) for a vImage method. You need two because this convolution can’t process an image in place. You need one vImage_Buffer for the input image and one for the output image.

A vImage_Buffer contains four elements. The .data element is a void* pointer to the image data. The .width, .height, and .rowBytes elements describe that data: width and height are in pixels, and rowBytes is the number of bytes from the start of one row to the start of the next. Both the inBuffer and outBuffer objects need to be set up before the actual method can be called.
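To make the role of .rowBytes concrete, here is a minimal plain-C sketch of finding one pixel in an interleaved, 4-channel, 8-bit buffer. `ImageBuf` is a hypothetical stand-in that mirrors the four vImage_Buffer fields so the snippet compiles without Accelerate, and `pixel_at` is an illustrative name, not a vImage function.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in mirroring the four fields of vImage_Buffer. */
typedef struct {
    void  *data;      /* pointer to the top-left pixel */
    size_t height;    /* number of rows */
    size_t width;     /* pixels per row */
    size_t rowBytes;  /* bytes from the start of one row to the next */
} ImageBuf;

/* Returns a pointer to the 4 bytes of pixel (x, y), or NULL if out of range.
   Assumes 4 bytes per pixel (for example ARGB8888). */
static uint8_t *pixel_at(const ImageBuf *buf, size_t x, size_t y) {
    assert(buf != NULL && buf->data != NULL);
    if (x >= buf->width || y >= buf->height) return NULL;
    /* Step rows by rowBytes, not width * 4: rows may carry padding. */
    return (uint8_t *)buf->data + y * buf->rowBytes + x * 4;
}
```

Note that the pointer is dereferenced to read a channel (for example `pixel_at(&buf, x, y)[0]`); casting the address itself to a byte yields part of the address rather than the pixel value.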

In order to set the .data value of the inBuffer struct, you use these lines:

CGDataProviderRef inProvider = CGImageGetDataProvider(img);

CFDataRef inBitmapData = CGDataProviderCopyData(inProvider);

// The other inBuffer attributes are set here

inBuffer.data = (void*)CFDataGetBytePtr(inBitmapData);

You can use the CGImageGet… methods to retrieve the values for the vImage_Buffer objects. The outBuffer can be set up using the same values because it will be the same size.

The only difference is that you need a new array that you create with this line:

pixelBuffer = malloc(CGImageGetBytesPerRow(img) * CGImageGetHeight(img));

Now that both vImage_Buffer objects are set up it’s time to perform the main method:

vImageBoxConvolve_ARGB8888(const vImage_Buffer *src, const vImage_Buffer *dest, void *tempBuffer, vImagePixelCount srcOffsetToROI_X, vImagePixelCount srcOffsetToROI_Y, uint32_t kernel_height, uint32_t kernel_width, uint8_t *backgroundColor, vImage_Flags flags)

First a few things about the vImage methods. The last eight characters, ARGB8888 in this case, are the format the data is in. This data is 4 channel (alpha, red, green, blue), interleaved, 8 bits per channel data.

There are two main kinds of data, planar and interleaved. With interleaved data, the channels are stored together pixel by pixel, ARGBARGBARGB etc. With planar data, each channel is stored in its own buffer, so you’d have 4 vImage_Buffer objects, one for each channel. This can improve performance.
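The difference between the two layouts can be sketched in a few lines of plain C. This is only an illustration of the memory layout, assuming tightly packed pixels with no row padding; `deinterleave_argb` is a made-up name, and in a real project you would more likely reach for vImage's own conversion functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Split tightly packed interleaved ARGB pixels into four separate planes.
   Each plane must have room for pixelCount bytes. */
static void deinterleave_argb(const uint8_t *argb, size_t pixelCount,
                              uint8_t *a, uint8_t *r, uint8_t *g, uint8_t *b) {
    assert(argb != NULL && a != NULL && r != NULL && g != NULL && b != NULL);
    for (size_t i = 0; i < pixelCount; i++) {
        a[i] = argb[4 * i + 0];  /* interleaved: A R G B A R G B ... */
        r[i] = argb[4 * i + 1];
        g[i] = argb[4 * i + 2];
        b[i] = argb[4 * i + 3];
    }
}
```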

There are also different bit depths; RGB565 is one example. vImage contains a suite of methods to convert between data types.

The first part of the method name is the operation that is being performed. Convolution is an operation where a kernel (a 2 dimensional array of weights) is laid over each pixel and its surrounding pixels; each pixel under the kernel is multiplied by the matching weight, and the products are summed to obtain the new value for that pixel. It is useful for all kinds of operations, including blur and emboss.
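As a rough illustration of what a kernel-based convolution computes for a single pixel, here is a plain-C sketch for one 8-bit channel and a 3x3 kernel. It is not how vImage is implemented; `convolve3x3_at` is a hypothetical name, the integer kernel plus divisor is just one convenient way to express fractional weights, and the kernel is applied without flipping it (which some texts call correlation).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Weighted sum of the 3x3 neighborhood around (x, y) in a single-channel
   8-bit plane, divided by `divisor` and clamped to 0..255.
   Reads past the border repeat the nearest edge pixel. */
static uint8_t convolve3x3_at(const uint8_t *plane, size_t width, size_t height,
                              size_t rowBytes, size_t x, size_t y,
                              const int16_t kernel[9], int32_t divisor) {
    assert(plane != NULL && kernel != NULL && divisor != 0);
    assert(x < width && y < height && rowBytes >= width);

    int32_t sum = 0;
    for (int ky = -1; ky <= 1; ky++) {
        for (int kx = -1; kx <= 1; kx++) {
            long sy = (long)y + ky;
            long sx = (long)x + kx;
            if (sy < 0) sy = 0;
            if (sy > (long)height - 1) sy = (long)height - 1;
            if (sx < 0) sx = 0;
            if (sx > (long)width - 1) sx = (long)width - 1;
            sum += kernel[(ky + 1) * 3 + (kx + 1)]
                 * plane[(size_t)sy * rowBytes + (size_t)sx];
        }
    }
    int32_t value = sum / divisor;
    if (value < 0) value = 0;
    if (value > 255) value = 255;
    return (uint8_t)value;
}
```

A kernel of nine 1s with a divisor of 9 gives a small blur, while a kernel whose only non-zero weight is a 1 in the center returns the image unchanged.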

Most of the convolution methods require that you provide a kernel but this one doesn’t. It adds up the neighboring pixels in a box pattern and divides by the number of pixels in the box, so each output pixel is the average of its neighborhood. The result is a fast blur. It does take a size for the kernel. The larger the kernel, the greater the blur.
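For intuition, a box blur over one 8-bit channel can be written naively in plain C as below. This is a sketch of the idea only: vImage is far faster and its exact rounding may differ, and the names `box_blur_plane` and `extend_index` are made up for this example. Border pixels are handled by repeating the nearest edge pixel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamp an index into [0, count - 1] so out-of-range reads repeat the edge. */
static size_t extend_index(long i, size_t count) {
    if (i < 0) return 0;
    if ((size_t)i >= count) return count - 1;
    return (size_t)i;
}

/* Naive box blur of a single-channel 8-bit plane. src and dst must be
   different buffers of the same geometry; kernel sizes must be odd. */
static void box_blur_plane(const uint8_t *src, uint8_t *dst,
                           size_t width, size_t height, size_t rowBytes,
                           uint32_t kernelHeight, uint32_t kernelWidth) {
    assert(src != NULL && dst != NULL && src != dst);
    assert(width > 0 && height > 0 && rowBytes >= width);
    assert(kernelHeight % 2 == 1 && kernelWidth % 2 == 1);

    const long ry = (long)(kernelHeight / 2);
    const long rx = (long)(kernelWidth / 2);
    const uint64_t count = (uint64_t)kernelHeight * kernelWidth;

    for (size_t y = 0; y < height; y++) {
        for (size_t x = 0; x < width; x++) {
            uint64_t sum = 0;
            for (long dy = -ry; dy <= ry; dy++) {
                size_t sy = extend_index((long)y + dy, height);
                for (long dx = -rx; dx <= rx; dx++) {
                    size_t sx = extend_index((long)x + dx, width);
                    sum += src[sy * rowBytes + sx];
                }
            }
            /* Average of the box, rounded to nearest (a choice of this sketch). */
            dst[y * rowBytes + x] = (uint8_t)((sum + count / 2) / count);
        }
    }
}
```

The cost of this version grows with the kernel area, which is one reason to prefer the library routine for anything beyond experimentation.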

Now I’ll run through each of the arguments. The first two are the input and output vImage_Buffers. These are passed in as pointers.

Next is the temp buffer, a void* to scratch memory that the function can use while it works (it is not a vImage_Buffer). If you don’t provide one, then the system will handle it. If you perform a bunch of operations it can be more efficient to reuse a temp buffer. I don’t need one, so I’ve passed in NULL.

The next two parameters are x and y offsets. If you only want to perform the operation on a specific region of the input image, you can use the offsets and a smaller output buffer to create a region of interest that’s a subrect of the entire image. I’m passing 0 to use the entire image.
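One way to get the smaller buffer for a region of interest is to describe a sub-rectangle of an existing allocation without copying any pixels. The sketch below shows the pointer arithmetic in plain C, assuming 4 bytes per pixel; `ImageBuf` is a hypothetical struct mirroring the vImage_Buffer fields and `sub_rect` is an illustrative helper, not part of vImage.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in mirroring the four fields of vImage_Buffer. */
typedef struct {
    void  *data;
    size_t height;
    size_t width;
    size_t rowBytes;
} ImageBuf;

/* Describe the w x h sub-rectangle whose top-left corner is (x, y).
   No pixels are copied; the result points into the original allocation. */
static ImageBuf sub_rect(const ImageBuf *full, size_t x, size_t y,
                         size_t w, size_t h) {
    assert(full != NULL && full->data != NULL);
    assert(x + w <= full->width && y + h <= full->height);
    ImageBuf roi;
    roi.data = (uint8_t *)full->data + y * full->rowBytes + x * 4;
    roi.height = h;
    roi.width = w;
    roi.rowBytes = full->rowBytes;  /* rows still stride over the full image */
    return roi;
}
```

The key detail is that rowBytes stays the same as the full image, because the next row of the region still starts one full image row further along in memory.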

The next two arguments are the height and width of the kernel, in that order. Both must be odd. Bigger means more blur, and it needn’t be square; you can blur more in the horizontal or vertical dimension.
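Because the kernel dimensions have to be odd, a value coming from something like a slider may need adjusting before it is passed in. A tiny helper along these lines would do it; `odd_kernel_size` is a hypothetical name and rounding up (rather than down) is an arbitrary choice for this sketch.

```c
#include <stdint.h>

/* Round a requested box size up to the nearest odd value (0 becomes 1). */
static uint32_t odd_kernel_size(uint32_t requested) {
    return requested | 1u;  /* setting the lowest bit makes any value odd */
}
```

With this, a requested size of 4 becomes 5, while 5 stays 5.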

The next parameter is the background color. I’m passing in NULL because it is only used when the edge flag asks for a background color fill. Finally, a flag field. A convolution requires that you set at least an edge flag. When you get to the edge of the image, the kernel doesn’t have neighboring pixels to use for the calculation, so you have to tell it what to do. I’m using extend, which means that it will create a row or column of pixels based on the last row or column that exists.

Once the convolution is performed, the outBuffer object contains the new image. It needs to be converted back into a CGImageRef and then to a UIImage. There are a number of ways of converting to a CGImageRef, but I used this one. The sample code contains another attempt, but it was leaking and I couldn’t seem to resolve it, so I settled on this method. The alternative seemed to be more direct, but they both seemed to be about the same speed. Here’s the code:

CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();

CGContextRef ctx = CGBitmapContextCreate(outBuffer.data,

outBuffer.width,

outBuffer.height,

8,

outBuffer.rowBytes,

colorSpace,

kCGImageAlphaNoneSkipLast);

CGImageRef imageRef = CGBitmapContextCreateImage (ctx);

First, create a colorspace. Then create a CGBitmapContext using the data and information from the outBuffer. The fourth parameter means eight bits per component (per channel), not per pixel.

The last argument is a flag field. The kCGImageAlphaNoneSkipLast means to create a CGImage without an alpha channel, and skip the fourth byte (the alpha byte). This means you’d lose the alpha in the image if you had one.

Once the context is set up you can create the CGImageRef.

The next line just creates a UIImage from a CGImageRef.

The last several lines clean up and release all the memory and objects that were created. Even though the project uses ARC, both Core Graphics and vImage are C APIs that use Core Foundation objects. That means that you must manage the memory yourself.

That’s it. I hope this can be of some help to those looking for examples of how to use vImage. I’m not going to explain how the slider callback method works (it just calls this method and passes a different size argument); it should be easy to follow for most of you. If you need help, comment.

Here’s what the Hubble image looks like blurred.

There are a bunch of other things you can do besides a convolution with the vImage framework. You can do color manipulations. You can do scaling, rotating, translating, etc. You can do histogram operations (either get a histogram for an image or match one image’s histogram to another). And there’s more.

The vImage programming guide is actually pretty good for explaining how the framework can be used and what the methods do. The part that was unclear to me was how to get image data in and out. If you are eager to use the framework, but you get stuck, post what you are trying to do in the comments and I’ll try to help you if I can.

Here’s the sample project I created while figuring this out. It also contains a method for matching one image’s histogram to another. It was created using the iOS 6 GM, but doesn’t require iOS 6, so just change the setting (Build Settings->Base SDK) if you aren’t using the iOS 6 toolchain yet.

I am interested in comparing the performance of the vImage framework against the GPUImage framework, but that will have to wait for another post.

As you mentioned, it is not clear to me how to get the individual pixel values. I have tried using typedef uint8_t Pixel_8888[4] myPix from vImage_Types.h (and Byte aPixel[4]) like this:

for(int i = 0; i < width; i++)

{

indexI = i*bytesPerPixel;

for(int j = 0; j < height; j++)

{

indexJ = j*bytesPerPixel;

myPix[0] = (Byte)&inBuffer.data[(indexJ*width)+indexI];

myPix[1] = (Byte)&inBuffer.data[(indexJ*width)+indexI+1];

myPix[2] = (Byte)&inBuffer.data[(indexJ*width)+indexI+2];

myPix[3] = (Byte)&inBuffer.data[(indexJ*width)+indexI+3];

}

}

but I am not properly accessing the data. I want to insert a cropped portion of one image into another, then I want to do further image processing. I was able to do it when I grab the buffer from a view with CGImageCreateWithImageInRect, CGBitmapContextCreate, and CGContextDrawImage. Except when I scale the image with CGAffineTransformScale. Then I have the same problem of getting a blank image insertion. So, I thought I would try using vImage, but I can't even get the unscaled image like this. Any clues?

When I compare values I collect from myPix to pixel values from the same pixel in an unsigned char buffer I get from CGContextDrawImage, they are not the same. Am I getting part of an address in myPix?

It appears I am inserting the address in myPix (I don’t have much experience with Objective C), but that is how it was illustrated in

http://lists.apple.com/archives/quartzcomposer-dev/2012/Nov/msg00025.html

Making some progress with:

myPixaddrs = (unsigned char*)&inBuffer.data[(indexJ*(int)(frgndImgView.image.size.width*fltScale))+indexI];

bckgndData[(indexJ*(int)(image.size.width))+indexI] = *myPixaddrs;

myPixaddrs = (unsigned char*)&inBuffer.data[(indexJ*(int)(frgndImgView.image.size.width*fltScale))+indexI+1];

bckgndData[(indexJ*(int)(image.size.width))+indexI+1] = *myPixaddrs;

myPixaddrs = (unsigned char*)&inBuffer.data[(indexJ*(int)(frgndImgView.image.size.width*fltScale))+indexI+2];

bckgndData[(indexJ*(int)(image.size.width))+indexI+2] = *myPixaddrs;

myPixaddrs = (unsigned char*)&inBuffer.data[(indexJ*(int)(frgndImgView.image.size.width*fltScale))+indexI+3];

bckgndData[(indexJ*(int)(image.size.width))+indexI+3] = *myPixaddrs;

I can pull out the image from the inBuffer.data, but I’m back to my original problem. If I rescale or rotate the image with CGAffineTransformScale or CGAffineTransformRotate, I can not grab the image data.

Hi,

This was a nice article.

I was hoping you could help me with an issue?

I have read Apple’s vImage guide and tried the code in example 2-1 which doesn’t seem to work (the first code example).

I would like to be able to create other kernels, such as sharpen, emboss etc and process the image with them.

Could you please explain how I would go about this, as the only example code I’ve seen uses blur and doesn’t appear to use a kernel (more like a built in kernel function).

For example – in Apple’s code, the following:

{-2, -2, 0, -2, 6, 0, 0, 0, 0}

is an emboss kernel.

How would I go about implementing that kernel in my code?

Many Thanks.

Awesome code! I would make one small change to account for the fact that images taken with the camera are often rotated. Change the line that creates returnImage to this to have the returned image have the same scale and orientation as the source:

UIImage *returnImage = [UIImage imageWithCGImage:imageRef scale:image.scale orientation:image.imageOrientation];

This works great if the source image is from the photo library, but if the image was created using UIGraphicsBeginImageContext, this function seems to swap the red and blue components. Any ideas how to fix this?

Figured out my problem:

CGImageGetBitmapInfo(img) & kCGBitmapByteOrderMask tells you the byte order. If it’s BGR instead of RGB, you can change it using vImageMatrixMultiply_ARGB8888 to swap the components.

Hi all,

The code above is working, but not with images which have Alpha Channel = NO.

What do you have to change to get the image blur with Alpha Channel = NO?

Hope you can help me, because I’m really stuck.

Thanks in advance!

Error message:

CGBitmapContextCreate: invalid data bytes/row: should be at least 1280 for 8 integer bits/component, 3 components, kCGImageAlphaNoneSkipLast.

Log:

(lldb) p outBuffer

(vImage_Buffer) $0 = {

data = 0x0d9cd000

height = 960

width = 640

rowBytes = 640